Abstract

The absence of head-to-head trials is a common challenge in comparative effectiveness research and health technology assessment. Indirect cross-trial treatment comparisons are possible, but can be biased by cross-trial differences in patient characteristics. Using only published aggregate data, adjustment for such biases may be impossible. Although individual patient data (IPD) would permit adjustment, they are rarely available for all trials. However, many researchers have the opportunity to access IPD for trials of one treatment, a new drug for example, but only aggregate data for trials of comparator treatments. We propose a method that leverages all available data in this setting by adjusting average patient characteristics in trials with IPD to match those reported for trials without IPD. Treatment outcomes, including continuous, categorical and censored time-to-event outcomes, can then be compared across balanced trial populations.

The proposed method is illustrated by a comparison of adalimumab and etanercept for the treatment of psoriasis. IPD from trials of adalimumab versus placebo (n = 1025) were re-weighted to match the average baseline characteristics reported for a trial of etanercept versus placebo (n = 330). Re-weighting was based on the estimated propensity of enrolment in the adalimumab versus etanercept trials. Before matching, patients in the adalimumab trials had lower mean age, greater prevalence of psoriatic arthritis, less prior use of systemic treatment or phototherapy, and a smaller mean percentage of body surface area affected than patients in the etanercept trial. After matching, these and all other available baseline characteristics were well balanced across trials. Symptom improvements of ≥75% and ≥90% (as measured by the Psoriasis Area and Severity Index [PASI] score at week 12) were experienced by an additional 17.2% and 14.8% of adalimumab-treated patients, respectively, compared with the matched etanercept-treated patients (both p < 0.001). Mean percentage PASI score improvements from baseline were also greater for adalimumab than for etanercept at weeks 4, 8 and 12 (all p < 0.05). Matching adjustment ensured that this indirect comparison was not biased by differences in mean baseline characteristics across trials, supporting the conclusion that adalimumab was associated with significantly greater symptom reduction than etanercept for the treatment of moderate to severe psoriasis.

With an increasing demand for comparative effectiveness research (CER), there is often interest in comparing alternative treatments that have not been directly compared in a head-to-head randomized trial. For example, one may wish to compare drug A with drug B when randomized trials have only compared each with drug C (e.g. placebo). To make a reliable indirect comparison of drug A versus drug B across the separate trials, it is desirable that the trials be as similar as possible.

The most widely used methods for indirect comparison rely exclusively on aggregate data, such as published trial results, and use common comparator arms (e.g. drug C) to adjust for any differences between trials. For example, if one trial provides the relative response rate of drug A versus drug C (RRA:C) and another trial provides the relative response rate of drug B versus drug C (RRB:C), the relative response rate of drug A versus drug B is estimated as RRA:C/RRB:C. This approach, introduced by Bucher et al.,[1] can incorporate multiple trials per treatment, and has been extended to simultaneously compare multiple treatments linked through a network of indirect comparisons,[2] and to adjust for aggregate baseline characteristics in a meta-regression framework.[3–5] Several comprehensive and detailed reviews of these and other methods for indirect treatment comparisons have been published.[5,6]
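
The adjusted indirect comparison described above can be sketched in a few lines. This is only an illustration: the function name, the input values and the 95% normal-approximation interval are assumptions made for the example, not results from any trial. The calculation is carried out on the log scale, where the variances of the two independent trial estimates add.

```python
import math


def bucher_indirect_rr(rr_ac, se_log_ac, rr_bc, se_log_bc, z=1.96):
    """Indirect relative response rate of A vs B through common comparator C.

    rr_ac, rr_bc: relative response rates of A vs C and B vs C.
    se_log_ac, se_log_bc: standard errors of the log relative rates.
    """
    log_rr_ab = math.log(rr_ac) - math.log(rr_bc)
    # The two trials are independent, so variances add on the log scale.
    se_log_ab = math.sqrt(se_log_ac ** 2 + se_log_bc ** 2)
    rr_ab = math.exp(log_rr_ab)
    ci = (math.exp(log_rr_ab - z * se_log_ab),
          math.exp(log_rr_ab + z * se_log_ab))
    return rr_ab, ci


# Hypothetical inputs chosen only for illustration.
rr_ab, (ci_low, ci_high) = bucher_indirect_rr(
    rr_ac=2.0, se_log_ac=0.10, rr_bc=1.6, se_log_bc=0.12)
print(f"RR A:B = {rr_ab:.3f} (95% CI {ci_low:.3f} to {ci_high:.3f})")
```

Because the standard errors combine, the indirect estimate is always less precise than either of the two direct estimates on which it is based.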

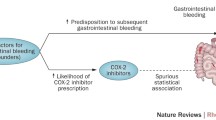

Although aggregate data are widely available, indirect comparisons that rely exclusively on aggregate data are subject to well appreciated limitations, including the potential for bias due to differences between trial populations.[1,5–9] For example, if baseline disease severity was greater for patients in the trial of drug A versus C compared with the trial of drug B versus C, an indirect comparison of drug A versus B across trials could be biased, even with treatment effects compared relative to drug C. Table I provides an extreme hypothetical example of such bias. An adjusted indirect comparison based on aggregate data, in the upper portion of table I, suggests that drug A is inferior to drug B, with a lower response rate by 10 percentage points. However, when individual patient data (IPD) are used to stratify the comparison by baseline disease severity, as in the lower portion of table I, drug A is revealed to be consistently superior to drug B, with a greater response rate by 10 percentage points in both strata. The false conclusion drawn from the aggregate data is an example of ecological bias, a well appreciated limitation of aggregate data that can be difficult or impossible to mitigate.[10]
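
The mechanism behind this kind of bias can be reproduced with a small numerical sketch. The stratum-specific response rates and the severity mix of each trial below are hypothetical values chosen to reproduce the pattern described above (a 10-point deficit in the aggregate comparison and a 10-point advantage within each stratum); they are not the values in table I.

```python
# Hypothetical response rates by baseline severity stratum (not from table I).
rates = {
    "A": {"mild": 0.8, "severe": 0.4},
    "B": {"mild": 0.7, "severe": 0.3},
    "C": {"mild": 0.2, "severe": 0.2},
}


def trial_rate(drug, share_mild):
    """Overall response rate in a trial with the given share of mild patients."""
    r = rates[drug]
    return share_mild * r["mild"] + (1 - share_mild) * r["severe"]


# Assumed severity mix: the A vs C trial enrolled mostly severe patients,
# and the B vs C trial enrolled mostly mild patients.
share_mild_ac, share_mild_bc = 0.25, 0.75

effect_a = trial_rate("A", share_mild_ac) - trial_rate("C", share_mild_ac)
effect_b = trial_rate("B", share_mild_bc) - trial_rate("C", share_mild_bc)

# Adjusted indirect comparison based on aggregate data only.
aggregate_diff = effect_a - effect_b

# Comparison stratified by baseline severity, as IPD would allow.
stratified = {s: rates["A"][s] - rates["B"][s] for s in ("mild", "severe")}

print(f"aggregate A - B: {aggregate_diff:+.2f}")
for stratum, diff in stratified.items():
    print(f"{stratum} A - B: {diff:+.2f}")
```

In this sketch the placebo response is identical across strata, so adjusting through drug C cannot remove the imbalance: the bias arises because the treatment effect relative to C differs by severity and the trials differ in their severity mix.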

If IPD were available from all trials of interest, biases stemming from differences between trial populations could be mitigated by regression adjustment.[11–15] However, IPD are rarely available for all trials of interest, since the sharing of clinical data is often limited by privacy or commercial concerns.[5,16,17] A middle ground between having full IPD and having only aggregate data is more common: a researcher could have access to IPD for trials involving one drug of interest, say drug A, but access to only aggregate data for trials of comparators such as drug B. Researchers who conduct their own trials, or who collaborate or are affiliated with trial sponsors, can often find themselves in this setting. For example, indirect comparisons are often helpful when new drugs are compared with existing therapies to inform healthcare payers and health technology assessment agencies. Since manufacturers often contribute to the development of these comparisons, IPD could be available from sponsored trials of their own drugs. However, despite widespread interest in using all available data in such comparisons,[18] there are few published methods or applications that combine IPD with aggregate data for indirect comparisons, leaving IPD as a largely untapped resource in this setting.[16]

Methods for combining IPD with aggregate data for direct comparison meta-analyses of head-to-head trials provide valuable opportunities for subgroup analyses, investigations of treatment-effect modification and reductions of effect heterogeneity,[19,20] and have been systematically reviewed.[21] In comparison, methods for combining IPD with aggregate data for indirect comparisons seem largely unexplored. Given the biases that can arise from imbalances in patient characteristics between trials, methods that can use even partial IPD to improve balance could expand the applicability of indirect comparisons.

This article proposes a matching approach to leveraging IPD for indirect treatment comparisons. Given IPD from trials of one treatment, we propose re-weighting those patients such that their average baseline characteristics match those reported for trials of the alternative treatment. Treatment outcomes can then be compared between balanced populations. The matching is accomplished by re-weighting patients in the trials with available IPD by their odds of having been enrolled in the trials without available IPD. The proposed method is motivated and illustrated through an example application to the comparative effectiveness of adalimumab and etanercept for the treatment of psoriasis.

1. Example Application

Psoriasis is a chronic systemic illness affecting 2.1% of American adults,[22] with about 150 000 new cases diagnosed each year.[23] Symptoms include itchy, painful plaques on the body surface and substantial impairment of health-related quality of life.[23–25] In the past 6 years, psoriasis treatment has been revolutionized by targeted biologic therapies. Two of the most commonly used are the tumour necrosis factor (TNF) antagonists adalimumab and etanercept. US FDA approval for the treatment of moderate to severe psoriasis was granted to etanercept in 2004 and to adalimumab in early 2008. Both drugs have demonstrated superiority over placebo (and over methotrexate in the case of adalimumab) for the reduction of psoriasis symptoms in randomized trials.[26–30] The primary outcome in these trials has been the Psoriasis Area and Severity Index (PASI), which measures the extent and severity of psoriasis skin symptoms. To date, no randomized, head-to-head clinical trial has compared adalimumab with etanercept for the treatment of psoriasis.

The goal of the current study is to make an indirect comparison between adalimumab and etanercept for the treatment of moderate to severe psoriasis in North America. The methods described in this study were developed to make use of all data available to the authors, which included IPD from adalimumab clinical trials but only published aggregate data from etanercept trials.

1.1 Methods

The research approach was divided into three steps. First, a detailed literature review (see the appendices in Supplemental Digital Content 1, http://links.adisonline.com/PCZ/A85) was conducted to identify a suitable collection of clinical trials for an indirect comparison of adalimumab versus etanercept. Second, patient baseline characteristics available for matching across trials were identified. Third, the adalimumab and etanercept trial populations were matched according to averages of the available baseline characteristics, and treatment outcomes were compared for adalimumab versus etanercept across the balanced trial populations. In presenting these steps, each subsection below begins by summarizing the general considerations that would apply to any application of the proposed methods, and then describes their particular application to the comparison of adalimumab versus etanercept.

1.1.1 Sample Selection

When implementing the matching method that will be described below, it is desirable to have inclusion/exclusion criteria in the trials with IPD that are equally or more inclusive than those in the trials without IPD. For example, if the trial with IPD enrolled only patients aged <65 years, comparison with aggregate outcomes from a trial of patients aged <40 years would be straightforward (patients aged ≥40 years could be removed from the IPD). However, comparison with aggregate data from a trial with many patients aged >65 years could be problematic, as it would not be possible to fully balance the age distribution between trials. It is also desirable that the included trials do not have protocol differences that could impact on outcomes and cannot be balanced by matching (e.g. one trial is double blind and the other is open label). Finally, the trials should report comparable outcome measures assessed in a consistent fashion across studies.

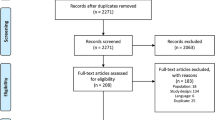

For the comparison of adalimumab and etanercept, IPD were available for all randomized, placebo-controlled trials of adalimumab for the treatment of psoriasis sponsored by Abbott Laboratories. Aggregate results for randomized, placebo-controlled trials of etanercept for the treatment of psoriasis were obtained from the published literature. Study design parameters and population characteristics (e.g. length of follow-up, schedule of efficacy assessments, inclusion and exclusion criteria and reported baseline characteristics) were reviewed. The trial reported by Leonardi et al.[28] was selected for etanercept. The trials reported by Menter et al.[27] (REVEAL; Randomized controlled EValuation of adalimumab Every other week dosing in moderate to severe psoriasis triAL) and Gordon et al.[30] (M02-528) were selected for adalimumab. Patients from these adalimumab trials were pooled together and then subjected to the same inclusion/exclusion criteria as those in Leonardi et al.[28] to form the REVEAL/528 study population. Outcomes available from all three trials included the percentages of patients who experienced a ≥75% or ≥90% improvement in the PASI score at week 12 relative to baseline (PASI 75 response and PASI 90 response, respectively), and the mean percentages of change in PASI from baseline to weeks 4, 8 and 12. Appendix A in the Supplemental Digital Content describes the sample selection process for the current study in further detail.

1.1.2 Selection of Baseline Characteristics for Matching

Mean baseline characteristics can be matched using the methods proposed here if they are reported for the trials with aggregate data, and available and consistently defined in the trials with IPD. In addition to matching mean baseline characteristics, it will often be possible to further match the standard deviations of continuous baseline variables, which are often reported in accordance with guidelines.[31] For example, given the baseline mean and standard deviation of age, it is straightforward to compute the mean of squared age, which can then be treated as a separate mean baseline characteristic for matching. Reported medians or other percentiles can also be matched. For example, if a trial reports a median baseline age of 32.5 years, this can be interpreted as a binary characteristic, age >32.5 years, with a frequency of 50%.
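
The conversion of reported summary statistics into mean targets can be sketched as follows. The function names and the reported values (mean age 45, standard deviation 12, median 44) are hypothetical; the second-moment formula treats the reported standard deviation as a population value, which ignores the small n/(n − 1) correction.

```python
def second_moment(mean, sd):
    """Mean of the squared variable implied by a reported mean and SD."""
    return sd ** 2 + mean ** 2


# Hypothetical values as they might be reported for an aggregate-data trial.
reported_mean_age = 45.0
reported_sd_age = 12.0
reported_median_age = 44.0


def matching_features(age):
    """Per-patient IPD features whose means are matched to the targets."""
    return [
        age,                                        # matches the mean
        age ** 2,                                   # matches the SD via E[age^2]
        1.0 if age > reported_median_age else 0.0,  # matches the median
    ]


targets = [
    reported_mean_age,
    second_moment(reported_mean_age, reported_sd_age),
    0.5,  # half of the patients lie above the median
]

print(targets)
print(matching_features(50.0))
```

Each entry of `targets` is then handled like any other reported mean: the IPD are re-weighted until the weighted average of the corresponding feature equals the target.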

All baseline characteristic means and standard deviations reported in Leonardi et al.[28] and available in REVEAL/528 were selected for matching and are listed in table II. Only the physician’s and patient’s global assessment scores were not matched, since Leonardi et al.[28] and REVEAL[27] used 6-point scales, whereas M02-528 used a 7-point scale.[30]

1.1.3 Matching Trial Populations

To adjust for differences in baseline characteristics between trials, we propose re-weighting individual patients from the trials with available IPD to match the mean baseline characteristics reported for the trials with only aggregate data. To simplify the initial presentation of the method, we temporarily ignore the placebo arms for each trial to focus on matching baseline characteristics, and we first consider the case in which only two trials are available: one with IPD and one without. The placebo arms contain valuable information and will be incorporated into the final procedure, which is then easily extended to include multiple trials for each treatment.

Without placebo arms, each trial corresponds to a unique treatment, and a study patient can be characterized by the random triple (X, T, Y), where X is a vector of baseline characteristics (e.g. age, sex, baseline disease severity), T indicates the treatment received (e.g. T = 0 for adalimumab and T = 1 for etanercept), and Y indicates the outcome of interest (e.g. a binary response indicator or a continuous outcome). The observed data are based on realizations of the random triple (xi, ti, yi), i = 1, …, n, but we observe the IPD (xi, ti, yi) only when ti = 0. When ti = 1, the IPD (xi, ti, yi) are not observed individually, but the mean baseline characteristics \({\bar x_1}\) and mean outcome \({\bar y_1}\) are observed (e.g. in the published literature).

Given these observed data, the causal effect of treatment T = 0 versus T = 1 on the mean of Y can be estimated as shown in equation 1:

\[\hat \theta = \frac{\sum\nolimits_{i = 1}^n (1 - t_i)\,w_i\,y_i}{\sum\nolimits_{i = 1}^n (1 - t_i)\,w_i} - {\bar y_1} \qquad (1)\]

where the weight \(w_i = \frac{\Pr (T_i = 1 \mid x_i)}{\Pr (T_i = 0 \mid x_i)}\) is the odds that patient i receives treatment T = 1 versus T = 0 (i.e. enrols in trial 1 vs trial 0) given baseline characteristics xi. Thus, the patients receiving treatment T = 0 are re-weighted to match the distribution of patients receiving T = 1: patients more likely to have received T = 1 versus T = 0 will be up-weighted to compensate for their under-representation in the T = 0 sample; patients less likely to have received T = 1 versus T = 0 will be down-weighted to compensate for their over-representation in the T = 0 sample. This estimator has been used previously in the setting where observational data are fully available for individual patients receiving either treatment.[32]

To apply this estimator, we must first estimate wi for each patient with ti = 0 from the observed data. As in matching methods based on propensity scores,[33] the wi may be assumed to follow the logistic regression model shown in equation 2:

\[\log (w_i) = \alpha + x_i^{\prime}\beta \qquad (2)\]

However, in our setting without IPD when ti = 1, the usual maximum likelihood approach cannot estimate the parameters of this model. A key feature of our proposed method is the use of a method of moments estimate for β, which is fully described in Appendix B of the Supplemental Digital Content. Plugging estimated weights based on the method of moments estimate \(\hat \beta \) into equation 1 gives the matching-adjusted treatment effect estimate shown in equation 3:

\[\hat \theta = \frac{\sum\nolimits_{i = 1}^n (1 - t_i)\,y_i\exp (x_i^{\prime}\hat \beta )}{\sum\nolimits_{i = 1}^n (1 - t_i)\exp (x_i^{\prime}\hat \beta )} - {\bar y_1} \qquad (3)\]

in which the intercept α cancels between the numerator and the denominator.

Thus, the final estimate adjusts the patient population observed to receive treatment T = 0 by re-weighting each individual patient by the estimated odds of receiving treatment T = 1 versus T = 0. Appendix B further shows that (i) these weights exactly match mean baseline characteristics between treatments (or trials); (ii) placebo-arm data can be incorporated by applying a standard adjusted indirect comparison[1] after first matching baseline characteristics across trials; and (iii) the estimate \(\hat \theta \), and statistical inferences for \(\hat \theta \), can be obtained using SAS.
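
Property (i) suggests one way in which the weights could be computed. Appendix B is not reproduced here, so the following is a minimal sketch under stated assumptions rather than the authors' implementation (which used SAS): it assumes that the method of moments estimate solves the balance condition Σ (xi − x̄1) exp(xi′β) = 0 over the IPD, which is equivalent to minimizing the convex function Q(β) = Σ exp((xi − x̄1)′β). The function name, the damped Newton iteration, the simulated covariates and the target means are all hypothetical.

```python
import numpy as np


def estimate_matching_weights(x_ipd, target_means, max_iter=100, tol=1e-10):
    """Weights proportional to exp(x_i' beta) that balance covariate means.

    Minimizes Q(beta) = sum_i exp((x_i - target)' beta) by Newton's method.
    The gradient of Q is zero exactly when the weighted IPD means equal
    the target means.
    """
    xc = np.asarray(x_ipd, dtype=float) - np.asarray(target_means, dtype=float)
    beta = np.zeros(xc.shape[1])
    for _ in range(max_iter):
        w = np.exp(xc @ beta)
        grad = xc.T @ w
        if np.max(np.abs(grad)) < tol * w.sum():
            break
        hess = xc.T @ (xc * w[:, None])
        step = np.linalg.solve(hess, grad)
        # Halve the step until the convex objective no longer increases.
        q, t = w.sum(), 1.0
        while np.exp(xc @ (beta - t * step)).sum() > q and t > 1e-8:
            t /= 2
        beta = beta - t * step
    return np.exp(xc @ beta), beta


# Simulated IPD covariates: age and a binary severity indicator.
rng = np.random.default_rng(0)
x_ipd = np.column_stack([rng.normal(45, 12, 500), rng.binomial(1, 0.4, 500)])
# Hypothetical means reported for the aggregate-data trial.
target_means = np.array([48.0, 0.5])

weights, beta_hat = estimate_matching_weights(x_ipd, target_means)
print(np.average(x_ipd, axis=0, weights=weights))  # weighted IPD means
```

Centring the covariates at the target means rescales every weight by the same constant, which has no effect on weighted averages. Balance holds whenever the iteration converges, which requires the target means to lie within the range of the IPD; this mirrors the requirement that the trials with IPD be at least as inclusive as the trials without.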

When multiple trials are available for each treatment versus placebo, the trials for each treatment can first be pooled together as in Bucher et al.[1] The matching-adjusted indirect comparison described above can then be applied to the pooled IPD and aggregate data. If important trial-level factors (e.g. double-blind vs open-label treatment) differ across trials, these factors (and potentially their interactions with other baseline characteristics) can be included as baseline characteristics for matching in the above framework, as long as all factor levels in the trials with aggregate data are represented in the trials with IPD.
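
Once weights are available, the placebo-anchored comparison can be sketched as a difference of differences. The function names, responses, weights and aggregate response rates below are hypothetical, and the sketch returns only a point estimate; statistical inference would need to account for the estimation of the weights.

```python
def weighted_rate(responses, weights):
    """Weighted mean of binary (or continuous) outcomes."""
    return sum(w * y for y, w in zip(responses, weights)) / sum(weights)


def anchored_difference(y_active, w_active, y_placebo, w_placebo,
                        agg_active_rate, agg_placebo_rate):
    """Matching-adjusted effect of the IPD drug versus the aggregate-data drug.

    Each drug is first compared with its own placebo arm; the two
    placebo-adjusted effects are then subtracted.
    """
    effect_ipd = (weighted_rate(y_active, w_active)
                  - weighted_rate(y_placebo, w_placebo))
    effect_agg = agg_active_rate - agg_placebo_rate
    return effect_ipd - effect_agg


# Hypothetical re-weighted IPD (responses and matching weights) by arm.
y_active, w_active = [1, 1, 0, 1], [1.0, 0.5, 2.0, 0.5]
y_placebo, w_placebo = [0, 1, 0, 0], [1.0, 1.0, 1.0, 1.0]

estimate = anchored_difference(y_active, w_active, y_placebo, w_placebo,
                               agg_active_rate=0.375, agg_placebo_rate=0.25)
print(estimate)
```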

The matching-adjusted indirect comparison estimator was applied to the comparison of adalimumab and etanercept in terms of binary outcomes (PASI 75 and PASI 90 responses at week 12) and continuous outcomes (the mean percentage change in PASI score from baseline to weeks 4, 8 and 12). Mean percentage PASI changes in Leonardi et al.[28] were extracted from figure 1a of that publication, and the standard errors reported for this measure at week 12 were assumed to apply to weeks 4 and 8 (for which standard errors were not reported).

1.2 Results

After selecting patients from REVEAL[27] and M02-528[30] using the inclusion and exclusion criteria described in Leonardi et al.,[28] 1025 patients were available for analysis in the combined REVEAL/528 population (58 patients from M02-528 and 967 from REVEAL): 678 randomized to adalimumab and 347 randomized to placebo. Before further matching, patients in REVEAL/528 had a lower mean age, a greater prevalence of psoriatic arthritis, less prior use of systemic treatment or phototherapy, and a smaller mean percentage of body surface area affected than patients in Leonardi et al.[28] (table II). In an unadjusted comparison of outcomes, the observed PASI 75 and PASI 90 response rates were higher in REVEAL/528 than in Leonardi et al.[28] on both the active treatment and the placebo arms (table III).

The matching procedure ensured that the means and standard deviations of all available baseline characteristics were exactly matched between the REVEAL/528 and Leonardi et al.[28] trial populations (table II). The effective sample size of the REVEAL/528 population after matching was 591 patients. Based on the re-weighted proportion of patients in each arm, this corresponded to approximately 388 patients treated with adalimumab and 203 treated with placebo. Matching adjustment reduced the rate of PASI 75 response by approximately one-half of a percentage point in the adalimumab arm and 1 percentage point in the placebo arm of REVEAL/528, bringing the placebo arm response rate closer to that of Leonardi et al.[28] In the matching-adjusted indirect comparison, adalimumab was associated with a greater rate of PASI 75 response than etanercept, by a difference of 17.2 percentage points (p < 0.001). Matching adjustment increased the rate of PASI 90 by <1 percentage point in the adalimumab arm and reduced the rate of PASI 90 response by <1 percentage point in the placebo arm of REVEAL/528, bringing the placebo arm response rate closer to that of Leonardi et al.[28] The matching-adjusted indirect comparison indicated that adalimumab was associated with a greater rate of PASI 90 response than etanercept, by a difference of 14.8 percentage points (p < 0.001).

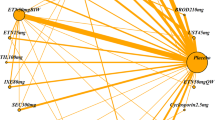

The mean percentage improvement in PASI score from baseline was compared across trials at weeks 4, 8 and 12. Prior to matching adjustment, the mean percentage improvements in PASI were greater for adalimumab in REVEAL/528 than for etanercept in Leonardi et al.[28] (51.7%, 69.0% and 75.2% vs 35%, 57% and 64.2% at weeks 4, 8 and 12, respectively). The unadjusted mean percentage improvements in PASI were also greater for the placebo arm in REVEAL/528 than the placebo arm in Leonardi et al.[28] at later weeks (9.2%, 13.8% and 15.4% vs 9.5%, 11% and 14%, at weeks 4, 8 and 12, respectively). After matching adjustment, the mean percentage improvements in PASI scores for the placebo arm of REVEAL/528 at later weeks were reduced, such that mean changes at all weeks were similar to those in the placebo arm of Leonardi et al.[28] However, the matching-adjusted mean PASI improvements for adalimumab remained significantly higher than those for etanercept (p < 0.05 for all visits; figure 1).

4. Discussion

By combining IPD for adalimumab clinical trials[27,30] and aggregate data for an etanercept trial,[28] this study provided a matching-adjusted indirect comparison of adalimumab versus etanercept for the treatment of psoriasis. Both before and after matching adjustment, adalimumab treatment was associated with substantially greater reductions in psoriasis symptoms than etanercept treatment. The novel matching method applied in this study increases the reliability of these findings by ensuring that the greater symptom reduction associated with adalimumab cannot be attributed to differences in the means or standard deviations of baseline characteristics between the adalimumab and etanercept trials.

In particular, prior to matching, the average patient in the etanercept trial had a greater percentage of their body surface area affected by psoriasis at baseline and was more likely to have received prior systemic or phototherapy than the average patient in the adalimumab trials. Placebo-treated patients also experienced slightly less symptom reduction in the etanercept trial than those in the adalimumab trials. These differences could be hypothesized to indicate greater baseline severity and potential treatment resistance among the etanercept patients, and could be argued to account for the apparent greater efficacy of adalimumab in a cross-trial comparison. However, the matching-adjusted results make this hypothesis implausible, since symptom reduction for adalimumab-treated patients remained substantially greater than for etanercept-treated patients when the baseline imbalances were eliminated and the differences in placebo arm outcomes were substantially reduced. Since the matching-adjustment re-weighted the adalimumab-treated patients to match the patients in the etanercept trial population, the estimated effect of adalimumab versus etanercept can be interpreted as an estimate of the effect that would have been observed if an adalimumab arm had been included in the etanercept trial.

Since the proposed method compares effects of adalimumab versus etanercept through their effects relative to placebo, the value of randomization in each trial is preserved to the same extent as in a standard adjusted indirect comparison.[1,5] When IPD are available for randomized trials of just one treatment, our proposed method can therefore expand the applicability of standard approaches to adjusted indirect comparison by eliminating differences in baseline characteristics that could otherwise limit their application. The present study illustrates that important differences can persist between trials even after the IPD are used to resolve differences in enrolment criteria. Meta-regression based on aggregate data has been used to adjust for differences in trial-level characteristics in indirect comparisons;[3,4] however, adjustment for trial-level effects as opposed to patient-level effects can be subject to ecological bias[10] and can require many trials to adjust for all important characteristics. When IPD are available, the matching-adjusted indirect comparisons proposed in this article can adjust for large numbers of baseline characteristics with only one trial per treatment. An indirect comparison matching IPD to aggregate data is described by Yu et al.[34] The methods described in this article improve on that application by allowing matching on any number of continuous or categorical baseline characteristics and providing a formal statistical framework for obtaining effect estimates and statistical inferences.

An advantage of the proposed matching-adjusted estimate is its robustness to model mis-specification. Even if the logistic regression model for the odds of enrolling in one trial versus the other is mis-specified, the estimated weights will guarantee that mean baseline characteristics are exactly balanced across trials. First-order confounding due to the observed patient characteristics (or any linear combination thereof) will therefore be eliminated. Matching on means and standard deviations will eliminate some second-order confounding due to any linear combination of observed characteristics and their squared values.

Although matching-adjusted indirect comparisons provide advantages by incorporating IPD, they are still subject to important limitations. The matching adjustment can balance observed patient characteristics, but there is still the potential for residual confounding due to unobserved differences between trials. The availability of randomized placebo-arm data allows some assessment of such confounding, since important differences between trials would generally be expected to affect placebo-arm outcomes. When matching adjustment reduces differences in placebo-arm outcomes between trials, and makes them nearly identical, as in the example application reported here, it appears plausible that the matching adjustment is successfully reducing the potential for bias. However, even if the placebo-arm outcomes are exactly matched, bias may still occur due to imbalance in unobserved factors that affect treatment-arm but not placebo-arm outcomes (e.g. through differences in compliance). Even with placebo-arm adjustments and the proposed matching, indirect comparisons are still observational studies.[8,9] Only head-to-head randomized trials can avoid these limitations.

When adjusting for confounding by re-weighting, the occurrence of extreme weights for some patients can lead to decreased statistical power to detect differences between treatments. If the extreme weights arise from a correct model for the odds of inclusion in one trial versus another, then the decreased statistical power is an honest reflection of the information available for cross-trial comparison.[35] For example, if baseline characteristics are highly divergent across trials, then some reduction in statistical power is to be expected as the price for proper adjustment. However, extreme weights that are highly sensitive to a potentially mis-specified model are a cause for concern. Unnecessarily extreme weights may also arise when attempting to balance multiple characteristics that are not important confounders, although this may be uncommon for cross-trial comparisons limited to reported baseline characteristics. The effective sample size metric described in Appendix B of the Supplemental Digital Content can help detect situations in which extreme weights may be leading to low statistical power.
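
Appendix B is not reproduced here. A widely used definition of effective sample size for weighted data, assumed in this sketch to correspond to the metric referred to above, is the squared sum of the weights divided by the sum of the squared weights. The function name and example weights are hypothetical.

```python
def effective_sample_size(weights):
    """Approximate effective sample size: (sum of w)^2 / (sum of w^2)."""
    total = sum(weights)
    return total ** 2 / sum(w * w for w in weights)


print(effective_sample_size([1.0, 1.0, 1.0, 1.0]))  # equal weights
print(effective_sample_size([3.0, 1.0, 1.0, 1.0]))  # one dominant patient
print(effective_sample_size([4.0, 0.0, 0.0, 0.0]))  # all weight on one patient
```

With equal weights the effective sample size equals the number of patients; it shrinks as the weights become more unequal, and it is unchanged when all weights are multiplied by a constant.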

Several variations and extensions of the proposed methods may be useful in practice. The present study measured the effects of drug versus placebo in terms of differences, but the methods could be easily adapted to comparisons of odds ratios or relative risks. Although the example application considered a comparison involving just three placebo-controlled trials, the methods could easily be applied to larger numbers of trials by first pooling the IPD and aggregate data for each treatment as described in the Matching Trial Populations section. Alternatively, if better matching can be achieved for particular pairs of trials (e.g. due to availability of different baseline characteristics for trials with only aggregate data), it may be advantageous to first conduct matching-adjusted indirect comparisons for these trials and then pool the resulting estimates using standard meta-analytic techniques. In settings where the aggregate data show substantial imbalances between treatment arms despite randomization, it may be appropriate to match baseline characteristics for the active treatment arms separately from the placebo arms. In settings where the IPD contain a large number of important patient characteristics that are not reported for the trials with aggregate data, partial adjustment for these factors may be attempted by first fitting a regression model for their effect on placebo-arm outcomes. Each patient’s predicted placebo-arm outcome based on this model may then be treated as a new baseline characteristic that can be matched to the mean placebo-arm outcome in the aggregate data. Although the proposed methods have been described in terms of placebo-controlled trials, they can be applied when trials employ any common comparator, including other active treatments or similarly defined standards of care. 
The proposed methods can also be used to compare treatments across trials without a common comparator arm, such as single-arm trials, although such comparisons could be subject to additional limitations.

This study has compared treatments in terms of dichotomous and continuous efficacy outcomes. In general, the estimated weights used to balance baseline characteristics can provide a matching-adjusted indirect comparison of any outcome measure across trials, provided it is available from the aggregate data (along with standard errors or other suitable measures of statistical uncertainty). The weights for the IPD can be incorporated into standard statistical procedures to compare, for example, average or median improvements in a continuous symptom score, regression-adjusted treatment effects (e.g. analysis of co-variance [ANCOVA] or least squares means), distributions of categorical outcomes, hazard ratios or full Kaplan-Meier curves for censored time-to-event outcomes, safety or quality-of-life outcomes, or composite outcomes such as the incremental cost-effectiveness ratio or the number needed to treat.
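
As one illustration for censored time-to-event outcomes, the matching weights can enter a Kaplan-Meier estimate by replacing counts of events and patients at risk with sums of weights. The function below is a hypothetical sketch with made-up data; it returns point estimates only and does not address variance estimation, which for weighted data would require a robust or resampling-based approach.

```python
def weighted_kaplan_meier(times, events, weights):
    """Weighted Kaplan-Meier curve as a list of (event time, survival).

    times: follow-up time for each patient.
    events: 1 if the event was observed, 0 if censored.
    weights: matching weight for each patient.
    """
    data = sorted(zip(times, events, weights))
    at_risk = sum(weights)
    survival, curve, i = 1.0, [], 0
    while i < len(data):
        t = data[i][0]
        event_weight = 0.0    # weighted number of events at time t
        leaving_weight = 0.0  # weighted number leaving the risk set at t
        while i < len(data) and data[i][0] == t:
            _, event, w = data[i]
            if event:
                event_weight += w
            leaving_weight += w
            i += 1
        if event_weight > 0:
            survival *= 1.0 - event_weight / at_risk
            curve.append((t, survival))
        at_risk -= leaving_weight
    return curve


# Hypothetical data: four patients, the second is censored at time 2.
curve = weighted_kaplan_meier(times=[1, 2, 3, 4], events=[1, 0, 1, 1],
                              weights=[1.0, 1.0, 1.0, 1.0])
print(curve)
```

With unit weights this reduces to the ordinary Kaplan-Meier estimate, and multiplying all weights by a constant leaves the curve unchanged.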

5. Conclusions

In the absence of a head-to-head randomized trial, this study matched the means and standard deviations of key baseline characteristics between trials of adalimumab and etanercept for the treatment of psoriasis. The matching leveraged all available data, including IPD for the adalimumab trials and published data for the etanercept trial. When treatment outcomes were compared across the matched trials, adalimumab was associated with significantly greater efficacy than etanercept for the reduction of psoriasis symptoms.

Indirect treatment comparisons can provide timely comparative evidence with relatively little expense, but it is essential to avoid biases that can arise from cross-trial differences.[5–9] The methods introduced in this article provide one approach to adjusting for cross-trial differences by leveraging IPD. Additional methods for leveraging IPD are possible, and empirical and simulation studies comparing alternative methods will be valuable. We are currently implementing analyses in which the reliability of matching-adjusted indirect comparisons will be compared with methods based only on aggregate data and methods based on full IPD, with validation against head-to-head trials.

While head-to-head randomized trials will remain the gold standard for CER, they require substantial time and resources, and will not be readily available for all treatment comparisons. Reliable methods for indirect treatment comparison will be valuable for filling evidence gaps and prioritizing treatment comparisons for investment in head-to-head trials.

References

Bucher HC, Guyatt GH, Griffith LE, et al. The results of direct and indirect treatment comparisons in meta-analysis of randomized controlled trials. J Clin Epidemiol 1997; 50: 683–91

Lumley T. Network meta-analysis for indirect treatment comparisons. Stat Med 2002; 21: 2313–24

Eckert L, Falissard B. Using meta-regression in performing indirect-comparisons: comparing escitalopram with venlafaxine XR. Curr Med Res Opin 2006; 22 (11): 2313–21

Nixon RM, Bansback N, Brennan A. Using mixed treatment comparisons and meta-regression to perform indirect comparisons to estimate the efficacy of biologic treatments in rheumatoid arthritis. Stat Med 2007; 26: 1237–54

Glenny AM, Altman DG, Song F, et al. Indirect comparisons of competing interventions. Health Technol Assess 2005; 9 (26): 1–134

Sutton A, Ades AE, Cooper N, et al. Use of indirect and mixed treatment comparisons for technology assessment. Pharmacoeconomics 2008; 26: 753–67

Phillips A. Trial and error: cross-trial comparisons of antiretroviral regimens. AIDS 2003; 17 (4): 619–23

Cranney A, Guyatt G, Griffith L, et al. Meta-analyses of therapies for postmenopausal osteoporosis: IX. Summary of meta-analyses of therapies for postmenopausal osteoporosis. Endocr Rev 2002; 23 (4): 570–8

Deeks JJ, Higgins JPT, Altman DG, et al. Analysing and presenting results. In: Higgins JPT, Green S, editors. Cochrane handbook for systematic reviews of interventions 4.2.6 [updated 2006 Sep]; Section 8. Chichester: John Wiley & Sons, Ltd, 2006 [online]. Available from URL: http://www.cochrane.org/sites/default/files/uploads/Handbook4.2.6Sep2006.pdf [Accessed 2010 Jun 23]

Berlin JA, Santanna J, Schmid CH, et al. Individual patient- versus group-level data meta-regressions for the investigation of treatment effect modifiers: ecological bias rears its ugly head. Stat Med 2002; 21 (3): 371–87

Pignon JP, Arriagada R, Ihde DC, et al. A meta-analysis of thoracic radiotherapy for small-cell lung cancer. N Engl J Med 1992; 327: 1618–24

Homocysteine Lowering Trialists’ Collaboration. Lowering blood homocysteine with folic acid based supplements: meta-analysis of randomised trials. BMJ 1998; 316: 894–8

Non-small Cell Lung Cancer Collaborative Group. Chemotherapy in non-small cell lung cancer; a meta-analysis using updated data on individual patients from 52 randomised clinical trials. BMJ 1995; 311: 899–909

Moore RA, McQuay HJ. Single-patient data meta-analysis of 3453 postoperative patients: oral tramadol versus placebo, codeine and combination analgesics. Pain 1997; 69: 287–94

Turner RM, Omar RZ, Yang M, et al. A multilevel model framework for meta-analysis of clinical trials with binary outcomes. Stat Med 2000; 19: 3417–32

Ross SD. Trends in meta-analysis. Drug Inf J 2009; 43: 171–6

Sutton A, Higgins J. Recent developments in meta-analysis. Stat Med 2008; 27: 625–50

Neumann PJ, Drummond MF, Jönsson B, et al. Are key principles for improved health technology assessment supported and used by health technology assessment organizations? Int J Technol Assess Health Care 2010; 26 (1): 71–8

Sutton A, Kendrick D, Coupland CA. Meta-analysis of individual- and aggregate-level data. Stat Med 2008; 27 (5): 651–69

Riley RD, Lambert PC, Staessen JA, et al. Meta-analysis of continuous outcomes combining individual patient data and aggregate data. Stat Med 2008; 27 (11): 1870–93

Riley RD, Simmonds MC, Look MP. Evidence synthesis combining individual patient data and aggregate data: a systematic review identified current practice and possible methods. J Clin Epidemiol 2007; 60 (5): 431–9

National Psoriasis Foundation. Benchmark survey on psoriasis and psoriatic arthritis: summary of top-line results [online]. Available from URL: http://www.webcitation.org/query.php?url=http://www.psoriasis.org/files/pdfs/press/npfsurvey.pdf&refdoi=10.1186/1477-7525-4-35 [Accessed 2010 Jun 23]

Stern RS, Nijsten T, Feldman SR, et al. Psoriasis is common, carries a substantial burden even when not extensive, and is associated with widespread treatment dissatisfaction. J Investig Dermatol Symp Proc 2004; 9: 136–9

Gottlieb AB, Chao C, Dann F. Psoriasis comorbidities. J Dermatolog Treat 2008; 19: 5–21

Kimball AB, Jacobson C, Weiss S, et al. The psychosocial burden of psoriasis. Am J Clin Dermatol 2005; 6: 383–92

Saurat JH, Stingl G, Dubertret L, et al. Efficacy and safety results from the randomized controlled comparative study of adalimumab vs methotrexate vs placebo in patients with psoriasis (CHAMPION). Br J Dermatol 2008; 158: 558–66

Menter A, Tyring SK, Gordon K, et al. Adalimumab therapy for moderate to severe psoriasis: a randomized, controlled phase III trial. J Am Acad Dermatol 2008; 58: 106–15

Leonardi CL, Powers JL, Matheson RT, et al. Etanercept as monotherapy in patients with psoriasis. N Engl J Med 2003; 349 (21): 2014–22

Papp KA, Tyring S, Lahfa M, et al. A global phase III randomized controlled trial of etanercept in psoriasis: safety, efficacy, and effect of dose reduction. Br J Dermatol 2005; 152: 1304–12

Gordon KB, Langley RG, Leonardi C, et al. Clinical response to adalimumab treatment in patients with moderate to severe psoriasis: double-blind, randomized controlled trial and open-label extension study. J Am Acad Dermatol 2006; 55: 598–606

Moher D, Schulz KF, Altman DG. The CONSORT statement: revised recommendations for improving the quality of reports of parallel-group randomised trials. Lancet 2001; 357 (9263): 1191–4

Hirano K, Imbens GW, Ridder G. Efficient estimation of average treatment effects using the estimated propensity score. Econometrica 2003; 71 (4): 1161–89

Rosenbaum P, Rubin D. Reducing bias in observational studies using subclassification on the propensity score. J Am Stat Assoc 1984; 79 (387): 516–24

Yu A, Johnson S, Wang S-T, et al. Cost utility of adalimumab versus infliximab maintenance therapies in the United States for moderately to severely active Crohn’s disease. Pharmacoeconomics 2009; 27 (7): 609–21

Stuart EA. Matching methods for causal inference: a review and a look forward. Stat Sci [online]. Available from URL: http://www.e-publications.org/ims/submission/index.php/STS/user/submissionFile/482?confirm=92cac221 [Accessed 2010 Jun 23]

Acknowledgements

This study was supported by Abbott Laboratories, Inc. and Analysis Group, Inc. Editorial support was provided by Ellen Stoltzfus, PhD, of JK Associates. JES, EQW, APY and EK are employees of Analysis Group, Inc., which received funding for this research from Abbott Laboratories. CMG, YB, SRG and PMM are employees and shareholders of Abbott Laboratories.

Cite this article

Signorovitch, J.E., Wu, E.Q., Yu, A.P. et al. Comparative Effectiveness Without Head-to-Head Trials. Pharmacoeconomics 28, 935–945 (2010). https://doi.org/10.2165/11538370-000000000-00000

DOI: https://doi.org/10.2165/11538370-000000000-00000